Metamorphosis: Transforming for utility

§6. I will now demonstrate what is involved in preparing a papyrus for life on the Internet. Before beginning, I would like to make some general recommendations.

USE STANDARDS!

§7. I apologise for shouting, but this needs to be emphasised. Some of the appropriate standards are:

- XML and related standards such as XSL

- The Text Encoding Initiative, which provides mark up guidelines written by academics for academics

- Betacode, which allows you to enter Greek easily

- Unicode, which allows you to display Greek and nearly every other written language on earth.

§8. There are probably other standards that I should be aware of. Is there a standard on archival imaging that is appropriate for papyri? As a general principle, if there is an appropriate standard, use it. You will be glad you did.

Use open source software

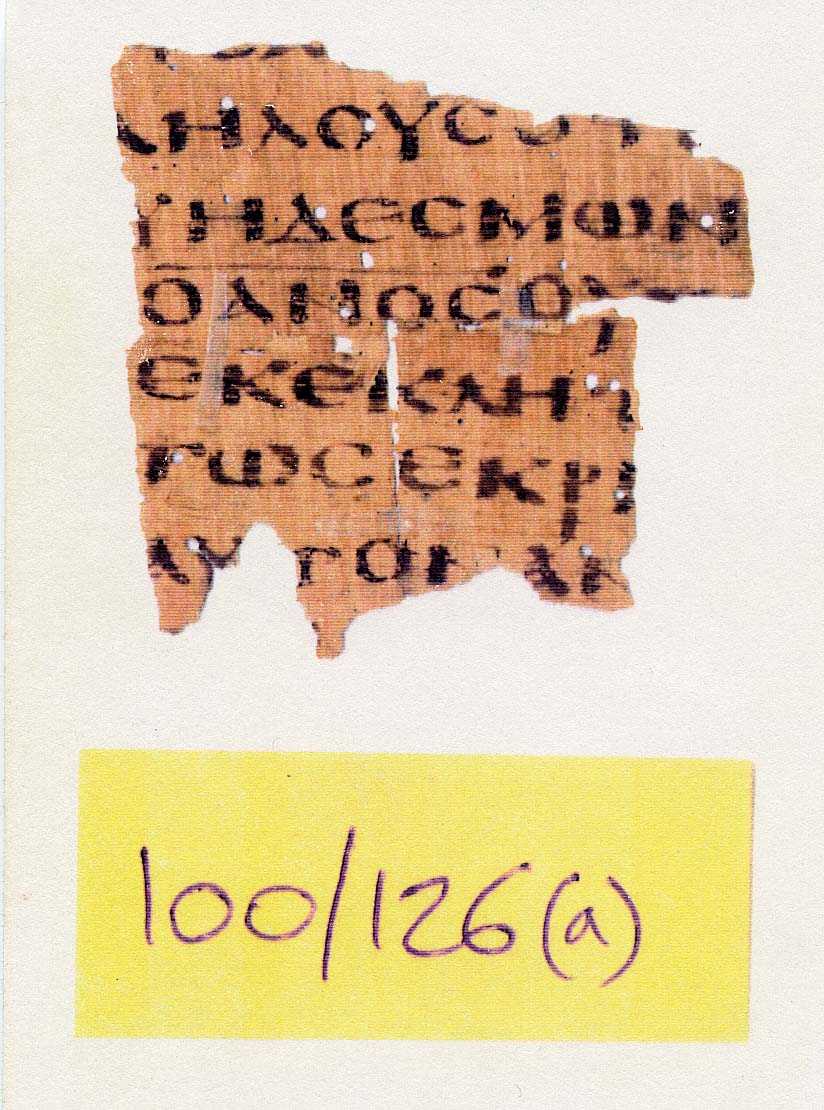

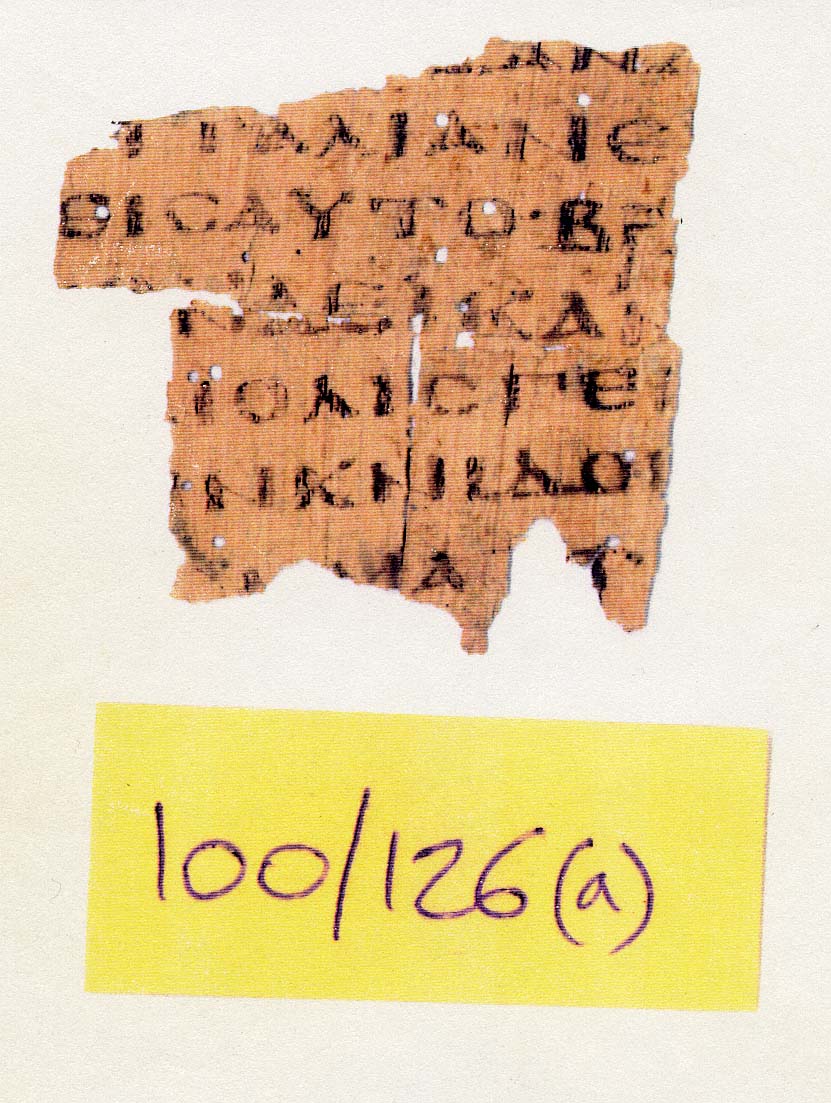

§9. There are various approaches to obtaining the necessary software:

- Buy off-the-shelf XML-aware digital library software. (Got a spare $50,000?)

- Hire an SQL programmer to create a system that will almost certainly not be as functional. (Got a spare $50,000?)

- Use open source software and command one of your graduate students to make it work. (You might have trouble finding someone who can read Greek and program at the same time.) Open source software is often as good as expensive commercial software, and it's free.

Demonstration of the digitisation phase

§10. I am presented with a papyrus fragment that seems to be from a codex. The first step is to produce digital images of the two sides. The images should be of archival quality, which means scanning at a resolution somewhere between 300 and 600 dpi. You may even produce multispectral images (i.e. a set of narrow band images taken at a series of wavelengths in the infrared to ultraviolet range). Multispectral images can be used to recover faded text and to differentiate between scribes who used different ink compositions. A team from Brigham Young University specialises in this kind of imaging, and might even do it for free.

§11. My first impression is that this is a Christian text because it contains what appears to be a nomen sacrum. I also notice a few words that indicate that the text is about chains and an Italian. With some good fortune, I manage to identify this as part of a New Testament manuscript containing the Acts of the Apostles. Armed with this information, I am ready to make a Betacode transcription:

[anaxwr]hsa[ntes elaloun]

[pros al]lhlous oti [ouden]

[qanato]u h desmwn [acion]

[prassei] o anos

outo?[s]

[ei mh ep]ekeklht[o *kaisa]

[ra kai ou]tws

ekri[nen o]

[hgemwn] auton an[apem]

[! ! ! ! ! ! ]! [! !

]! [

[! ! *ale]c?and[rinon pleon]

[eis th]n? *italian

e[nebibasen]

[hmas] eis auto: bra[duplo]

[ounte]s? en de

i+kan[ais hme]

[rais kai] molis gen[omenoi]

[kata t]hn

*knidon [mh pros]

[ewnto]s? hmas? t[ou

§12. There are two ways to display this as Greek. The first requires a font that displays Betacode as Greek characters. You will need to use a program to convert the Betacode to something that the font will render correctly. In addition, every user will have to obtain and install the font on his or her computer. This is not a trivial exercise, so this approach will immediately reduce the the potential size of your viewing audience.

§13. The second approach uses a program to convert the Betacode to Unicode, then relies on the user having a web browser that is modern enough to display Unicode correctly. The latest browsers (e.g. Internet Explorer, Netscape, Mozilla and, for Mac OS X, Safari) do quite well with Unicode. Some will complain that not everyone has the latest generation of browsers. Even so, the Unicode approach has the best long term prospects because it is standards based.

§14. The last stage is to wrap the transcription in TEI XML. I will not bore you with the details. The TEI has a way to tag nearly everything a scholar needs to tag, perhaps not exactly as the scholar would prefer it, but nevertheless sufficient in almost every case. Using TEI is tricky. Firstly, you need to learn enough to do things like tag scribal corrections, apparatus entries for orthographic variants, and so on. Hardly anyone knows the full TEI. (Would anyone here venture to guess the number?) Fortunately, there are others who have already walked this path. If you manage to obtain an XML transcription from Perseus, you will see how they use TEI to mark up Greek manuscripts.

§15. You will also need software to edit and validate XML files and XSL stylesheets (e.g. Emacs), along with an XSLT processor (e.g. Apache's Xalan), a set of XSLT stylesheets and a good operating system to run it all. (I prefer Linux.) Stylesheets are used to convert XML into a form suitable for display on whatever display device you happen to be using, whether a web browser, a cell phone, a Personal Digital Assistant or something that is yet to be invented. Sebastian Rahtz of the Oxford University Computing Service has written a suite of stylesheets for TEI XML. These provide a good starting point for your own stylesheets.

§16. The combination of XML and XSLT is the best known strategy to future-proof your transcriptions. It requires whoever is doing the work to learn enough TEI, XML and XSLT to be dangerous. This requires a lot of reading and experimentation. The latest edition of TEI is a must. Ask around for good books on XML and XSLT. Also, join discussion lists such as TEI-L and the UNICODE mailing list at the University of Kentucky. Do not expect your graduate student to learn what is required overnight or even within three months. You may have to hire a consultant to get the basic infrastructure in place.

§17. On the other hand, you could stick to HTML, which only requires your worker to learn HTML and not the TEI, XML and XSLT trilogy. You can produce an acceptable digital library in this manner. If you take this approach, there is a fair chance that your transcriptions will eventually have to be reworked into XML in order to remain useful for future generations.